2. Model building

A Bayesian statistical model is specified by the likelihood model (also called observational model) and prior model. This can be expressed as the joint probability density of the data, \(x\), and model parameters, \(\theta\), such that

We perform inference by conditioning on the data and calculating expectation values of the resulting posterior distribution. Recalling Bayes’ theorem

we note that the results of our inference are completely dependent on the assumed model. Bayes’ theorem is not some magical guarantee, and if our model does not represent the actual data generating process our inference will yield results that are meaningless in practice. Even worse, if we are not careful, a misspecified model may produce results that “look good” but are far from the truth - leading us to draw the wrong conclusions from our analysis.

Our workflow begins with building our first sensible model as a starting point, that we can later check and iterate upon to make sure our inferences are useful. The first model doesn’t need to be perfect, and in fact should be as simple as possible while still including the scientific phenomenon of interest. This process is what we will focus on here.

Going from a scientific question to a statistical model

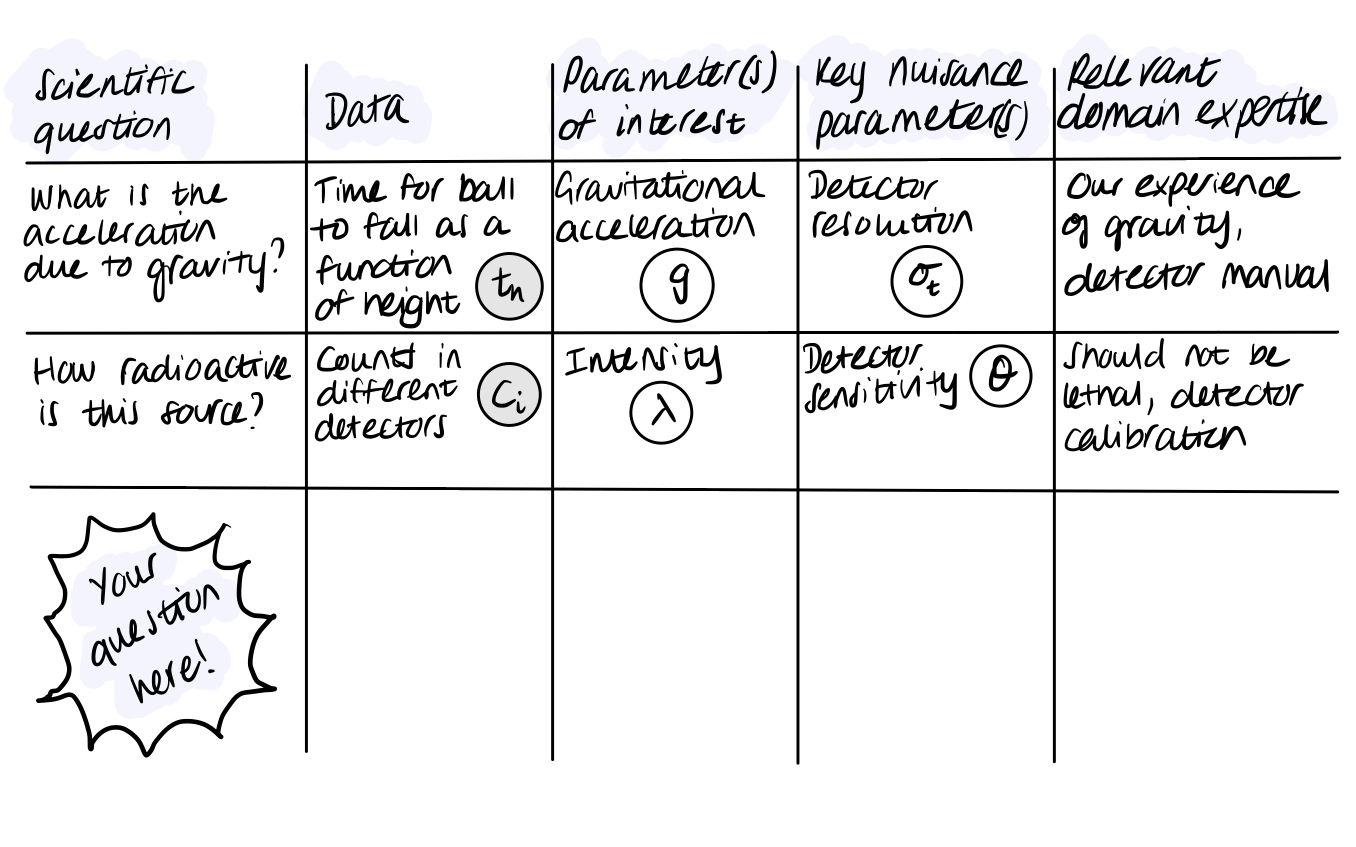

So, model building is very important, but where to start? It can be helpful to break down the problem into the following components:

Scientific question

Data

Parameter(s) of interest

Key nuisance parameter(s)

Relevant domain expertise

The model-building problem then comes down to defining a connection between your parameters and data (the likelihood/observational model) and incorporating relevant domain expertise (the prior model).

To get a feel for how this can work, consider the following examples:

Exercise 1 (10 points): Fill out this table for some more complicated examples such as:

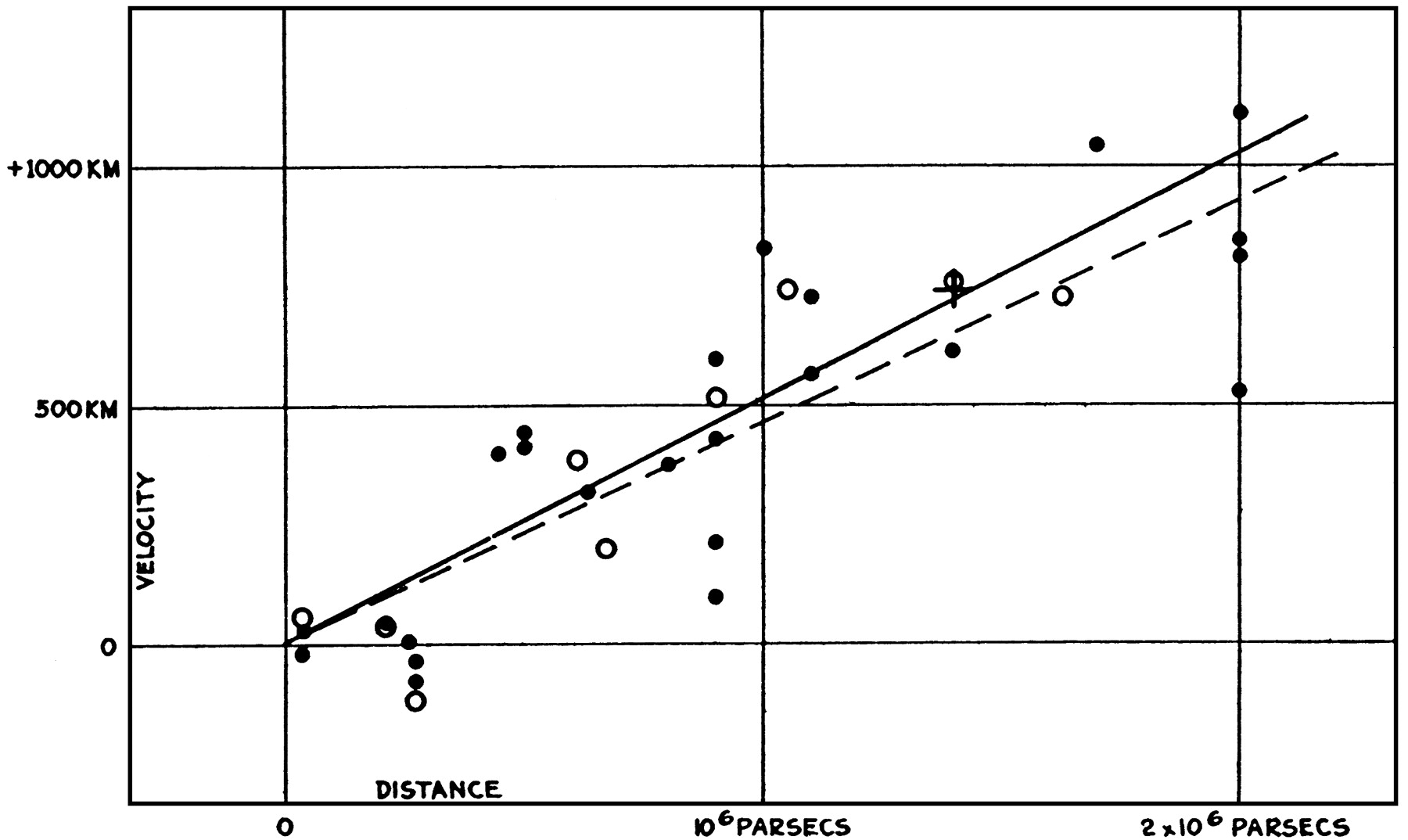

What is the value of the Hubble constant?

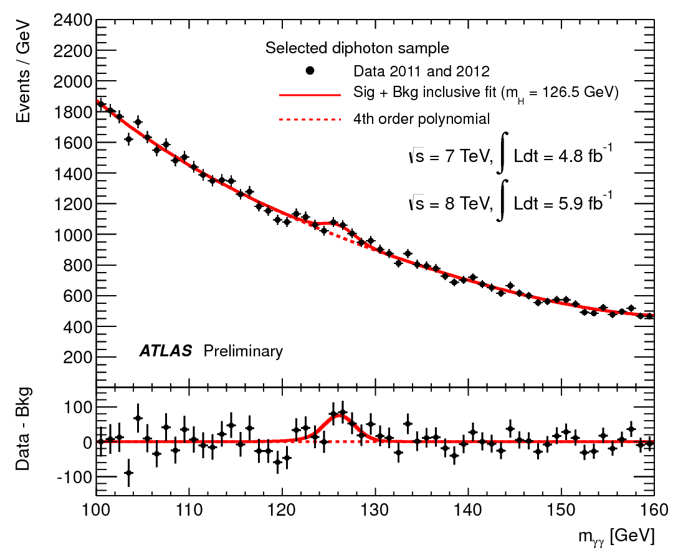

What is the mass of the Higgs boson?

An example from your own field/interests Below are some relevant plots of data used to make such measurements.

The original Hubble diagram from this paper:

First presentation of ATLAS Higgs to \(\gamma\gamma\) results on 4th July 2012:

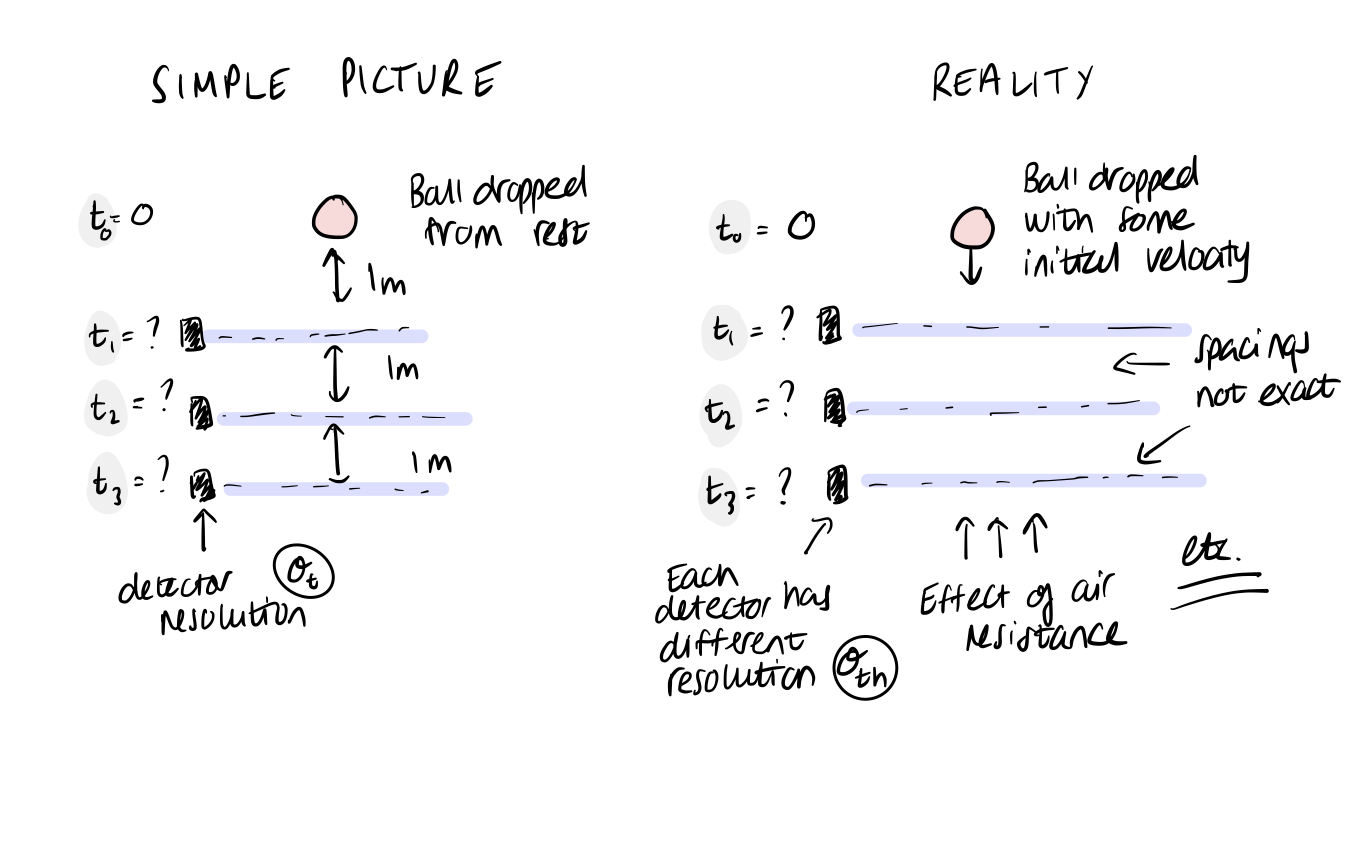

The impossible model

When starting the model-building process, it can also be helpful to think about the ideal model that you would fit if you had no restrictions on model complexity or computational resources. The purpose of this is to consider each step of the data generating process in detail, from start to finish. We are forced to think about complexities of the physical system and measurement process that may or may not be important for our later inference. The model itself may be impossible to actually write down or implement, but in doing this, we give can identify important steps and give context to the simpler models we will later go on to create.

Taking the first example in the table above, consider what other effects may be important…

Exercise 2 (5 points): Can you expand the complexity for one of the other models that we discussed? List possible factors.

Probabilistic graphical models

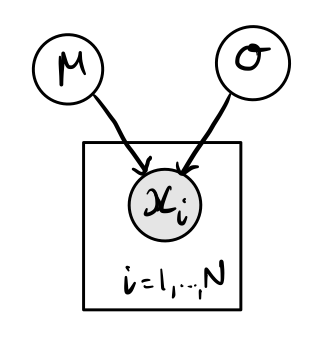

For both of the above steps, it can be useful to sketch out connections between data and parameters using directed acyclic graphs (DAGs), often also called probabilistic graphical models (PGMs). These can be thought of as a visualisation of the data generating process, with parameters in unshaded circles, data or observations in shaded circles, and the arrows representing the connections between them.

Here is a trivial example for that of data sampled from a normal distribution, such as what we looked at when introducing Stan in the Are you ready? section of the introduction notebook. The normal distribution is defined by two parameters, \(\mu\) and \(\sigma\). \(N\) independent samples from this distribution are then taken, shown by \(x_i\). The box around \(x_i\) shows that there are a number of repeated samples, that we can think of as observations or measurements.

Exercise 3 (5 points): Now it’s your turn! Sketch graphical models for the first two examples in the above table (measurement of gravitational acceleration and radioactivity).

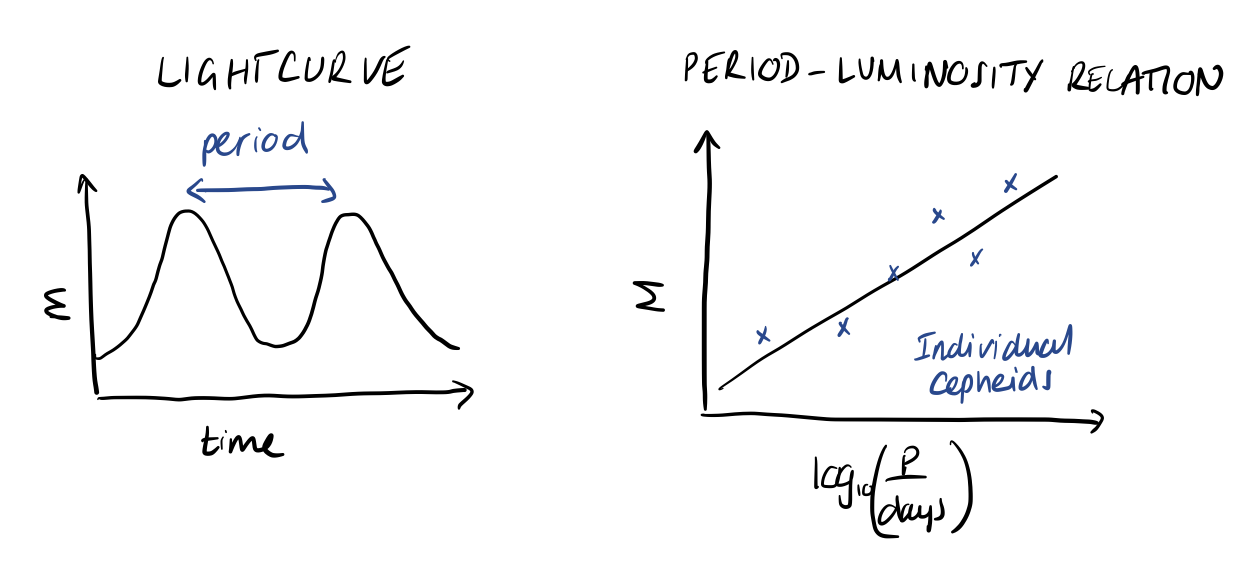

An example: the period-luminosity relation of Cepheid variable stars

Cepheid variables are a type of star that pulsates in observed flux, such that the apparent magnitude of the star varies in time with a well-defined period and amplitude. This behaviour is interesting in its own right, but also because it has been observed that there is a strong correlation between this period and the intrinsic luminosity of these stars. This period-luminosity relation has the form

where \(M\) is the absolute magnitude, related to the apparent magnitude, \(m\), by the luminosity distance, \(d_L(z)\)

In astronomy, it is common to work with magnitudes instead of flux and luminosity, so the above expression is basically the equivalent of \(F = L / 4 \pi d_L^2(z)\). The magnitude is related to the logarithm of flux/luminosity and it is important to remember that magnitude decreases with increasing brightness, although we typically invert the y-axis when plotting magnitude for easier interpretation. So, intrinsically brighter Cepheids have longer periods.

We know this because we can measure the distance to nearby Cepheids using parallax. By determining the period-luminosity relation, we can then measure the distance to Cepheids that are further away, extending the cosmic distance ladder. Measuring distances to far away objects allows us to compare their luminosity distance and redshift, giving us an estimate of the Hubble constant.

In the coming notebooks, we will explore a series of scientific questions related to Cepheid variable observations:

What is the nature of the period-luminosity relation?

Is the relationship universal? (i.e. the same in different galaxies)

What is the value of the Hubble constant?

Generative modelling

Using what we know about Cepheid variables and the period-luminosity relation, we can start to build a simple initial model for what we think could be the data-generating process.

Towards an initial model

Measuring the period of a Cepheid is relatively straightforward from studying its lightcurve, and so to start with we can assume that the period is a known quantity. However, the observed characteristic apparent magnitude, \(\hat{m}\), of a Cepheid can be more tricky to reconstruct exactly, so let’s say that it is reported with some fixed uncertainty, \(\sigma_m\), that is the same for all Cepheids. We can assume that

where \(m\) is the true apparent magnitude that is not observed. Knowing that our measured Cepheids are going to be pretty nearby (\(z < 0.01\)), we can approximate the luminosity distance as

where \(c\) is the speed of light, and \(H_0\) is the Hubble constant. Again, for simplicity, let’s assume that for now we just look at \(N_c\) Cepheids in a single galaxy with a known redshift, \(z\).

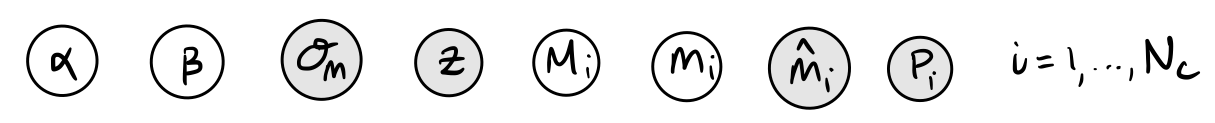

Exercise 4 (5 points): With these simplifying assumptions in mind, sketch the corresponding graphical model for our initial Cepheid variable model. The parameters and known quantities are summarised below, just arrange them into a graph.

Exercise 5 (10 points): Using the parameter and fixed quantity values given below, as well as your graphical model, write a simulation for the observed apparent magnitudes, \(m_i\). Plot your results in \(\log_{10}(P/\mathrm{days})\)-\(m\) space, comparing with the true period-luminosity relation.

[1]:

import numpy as np

from matplotlib import pyplot as plt

[2]:

# known quantities

c = 3.0e5 # km/s

H0 = 70 # km/s/Mpc

z = 0.005

Nc = 10

P = [71.5, 45.0, 19.9, 30.6, 8.8, 75.2, 21.5, 22.0, 34.5, 87.4] # days

sigma_m = 0.5

# high-level parameter values

alpha = -2

beta = -4

Recall:

[3]:

# define some useful functions

# dL: luminosity distance

def luminosity_distance(z):

# to be completed...

pass

# M: absolute magnitude

def absolute_magnitude(alpha, beta, P):

# to be completed...

pass

# m: apparent magnitude

def apparent_magnitude(M, z):

# to be completed...

pass

[4]:

# simulate the observed apparent magnitudes

# M_true = ...

# m_true = ...

# m_obs = ...

# M_obs = ...

pass

[5]:

# plot the true and observed apparent magnitudes

log10_P_grid = np.linspace(0, 3)

fig, ax = plt.subplots()

#ax.scatter(log10P, m_true, label="m_true")

#ax.scatter(log10P, m_obs, label="m_obs")

ax.invert_yaxis() # so brighter as y-axis increases

ax.legend()

ax.set_xlabel("log10(P/days)")

ax.set_ylabel("m")

No handles with labels found to put in legend.

[5]:

Text(0, 0.5, 'm')

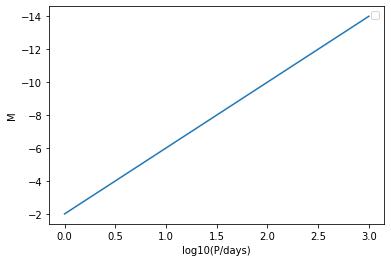

[6]:

# plot the true and observed absolute magnitudes, compare with true P-L relation

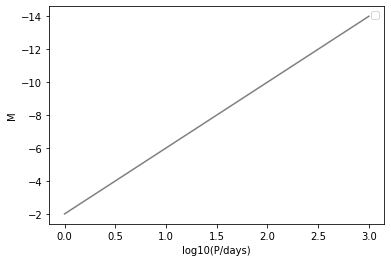

log10P_grid = np.linspace(0, 3)

fig, ax = plt.subplots()

#ax.scatter(log10P, M_true, label="M_true")

#ax.scatter(log10P, M_obs, label="M_obs")

ax.plot(log10P_grid, alpha + beta * log10P_grid)

ax.invert_yaxis() # so brighter as y-axis increases

ax.legend()

ax.set_xlabel("log10(P/days)")

ax.set_ylabel("M")

No handles with labels found to put in legend.

[6]:

Text(0, 0.5, 'M')

In the above plots, you may have been tempted to use ax.errorbar() or plt.errorbar() to show the data, m or M together with the uncertainty, sigma_m.

Note: The uncertainties on

Mandmare equivalent, due to the additive relationship between the two.

This can be a helpful visualisation, and you are free to do so if you wish! However, it is important to remember that in the Bayesian way of thinking, data do not have errors, rather, the model has uncertainty. So the observed magnitude is not uncertain, we saw it! What is really uncertain is what it implies for the true magnitude, that we cannot see.

To get a feel for this, let’s consider the last step in our generative model - the connection between the true and observed magnitudes - in isolation for a moment. We can reverse our simulation for this step, and add the distribution of possible true magnitudes to the above plot for the given observed magnitudes:

Note: We can use Bayes’ theorem to find a proportional relationship for p(

M_true|M_obs) given P(M_obs|M_true) and assuming P(M_true) = constant. This is intended as an illustration. It is important to note that we will need to perform a fit including our entire model to get the full P(M_true|M_obs) in this context, as we will see in the next notebook.

[7]:

fig, ax = plt.subplots()

N_sims = 250

for i in range(N_sims):

# to be completed...

# run "reverse" simulation

# ax.scatter(log10P, M_true, color="r", alpha=0.01)

pass

#ax.scatter(log10P, M_obs, label="M_obs", color="g") # will not change per sim

ax.plot(log10P_grid, alpha + beta * log10_P_grid, color="k", alpha=0.5)

ax.invert_yaxis() # so brighter as y-axis increases

ax.legend()

ax.set_xlabel("log10(P/days)")

ax.set_ylabel("M")

No handles with labels found to put in legend.

[7]:

Text(0, 0.5, 'M')

Now you should see a nice distribution of possible M_true values for each known M_obs. If we do want to plot errorbars, we can think of them as marking out the standard deviation of these M_obs distributions.

Exploratory data analysis

Now that we have some feeling for the generative model, let’s take a look at some Cepheid variable data. You can find two files in the data/ directory:

galaxies.dat

cepheids.dat

The first contains information on the host galaxies, and the second contains information on the individual Cepheids studied in each host galaxy.

The right time in a workflow for exploratory data analysis can be difficult to define. In general, we want to be aware of how our data looks when designing a model for it, but also not too influenced such that we get carried away and design a model that overfits the data we have and is uncapable of generalising to unseen data. Here, we want to see how our first effort at a generative model stacks up, and also think about directions for improving our model.

Homework exercise 1 (30 points): Complete the following exploratory data analysis.

[8]:

# read in the files

# columns: gal z

galaxy_info = np.loadtxt("data/galaxies.dat")

# columns: gal m_obs sigma_m P[days] delta_logO_H

cepheid_info = np.loadtxt("data/cepheids.dat")

[9]:

# reorganise the data into a dictionary indexed by the galaxy number

galaxies = [int(ngc_no) for ngc_no in galaxy_info[:, 0]]

data = {int(x[0]):{"z":x[1]} for x in galaxy_info}

for g in galaxies:

j = np.where(cepheid_info[:, 0] == g)[0]

data[g]["gal"] = np.array([int(i) for i in cepheid_info[j, 0]])

data[g]["Nc"] = len(data[g]["gal"])

data[g]["m_obs"] = cepheid_info[j, 1]

data[g]["sigma_m"] = cepheid_info[j, 2]

data[g]["P"] = cepheid_info[j, 3]

data[g]["delta_logO_H"] = cepheid_info[j, 4]

data[g]["log10P"] = np.log10(data[g]["P"])

[10]:

# have a look at the data structure for the first galaxy

data[galaxies[0]]

[10]:

{'z': 0.007125,

'gal': array([1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309,

1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309,

1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309,

1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309,

1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309,

1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309,

1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309,

1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309,

1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309, 1309,

1309, 1309, 1309, 1309, 1309]),

'Nc': 104,

'm_obs': array([24.81, 26.05, 26.04, 22.8 , 21.86, 23.45, 23.85, 25.68, 24.6 ,

24.94, 21.34, 25.51, 23.45, 27.49, 24.16, 25.81, 23.9 , 26.28,

22.47, 25.13, 26.4 , 23.37, 24.78, 24.04, 23.86, 23.36, 23.68,

22.48, 22.99, 22. , 26.91, 25.79, 24.11, 23.51, 23.73, 21.48,

25.02, 25.08, 26.24, 25.71, 22.85, 21.75, 23.16, 23.74, 28.62,

26.46, 24.58, 27.17, 26.19, 24.32, 20.03, 23.12, 25.08, 23.71,

21.8 , 28.82, 23.5 , 25.2 , 22.87, 23.52, 25.4 , 23.58, 23.66,

23.02, 21.95, 23.83, 26.71, 28.23, 23.38, 23.38, 26.54, 23.67,

23.03, 24.22, 24.77, 24.19, 23.63, 24.34, 25.71, 22.09, 22.22,

25.38, 24.78, 25.57, 24.74, 23.65, 23.87, 22.16, 27.46, 22.85,

27.57, 28.2 , 24.14, 24.53, 23.56, 23.59, 22.15, 25.75, 21.87,

19.88, 24.36, 24.4 , 25.17, 26.11]),

'sigma_m': array([0.3465, 0.4568, 0.53 , 0.3921, 0.2858, 0.4465, 0.365 , 0.3158,

0.2928, 0.2249, 0.3708, 0.3951, 0.6394, 0.5376, 0.5621, 0.3253,

0.3062, 0.493 , 0.5266, 0.1681, 0.1798, 0.3736, 0.1958, 0.677 ,

0.6026, 0.4121, 0.231 , 0.181 , 0.6874, 0.5242, 0.616 , 0.3323,

0.2505, 0.2797, 0.6141, 0.3838, 0.498 , 0.5834, 0.2518, 0.1477,

0.5397, 0.6768, 0.6723, 0.3943, 0.4793, 0.5398, 0.6414, 0.1973,

0.3435, 0.3503, 0.5174, 0.3549, 0.6149, 0.6082, 0.1421, 0.2811,

0.6878, 0.1214, 0.3954, 0.6714, 0.5863, 0.2766, 0.4577, 0.3587,

0.4554, 0.6363, 0.4324, 0.3957, 0.2916, 0.258 , 0.4254, 0.1494,

0.4814, 0.5778, 0.6728, 0.5108, 0.393 , 0.3912, 0.68 , 0.2268,

0.347 , 0.6938, 0.117 , 0.5208, 0.1151, 0.2925, 0.1441, 0.1365,

0.1668, 0.2016, 0.4766, 0.363 , 0.5985, 0.2439, 0.214 , 0.5271,

0.615 , 0.4354, 0.5227, 0.4631, 0.4355, 0.6162, 0.6519, 0.6098]),

'P': array([ 40.4 , 18.37 , 26.94 , 36.51 , 60.36 , 35.8 , 21.23 ,

21.88 , 27.06 , 25.44 , 42.35 , 23.35 , 22.37 , 10.06 ,

15.77 , 22.25 , 54.26 , 20.12 , 32.54 , 27.51 , 17.33 ,

37.04 , 23.86 , 29.43 , 93.36 , 44.58 , 70.27 , 112.6 ,

91.35 , 37.71 , 17.65 , 16.04 , 25.6 , 56.44 , 9.834,

70.04 , 15.53 , 22.22 , 6.193, 33.97 , 105.1 , 74.57 ,

34.65 , 47.13 , 10.65 , 12.95 , 20.05 , 17.29 , 19.64 ,

21.85 , 90.86 , 49.45 , 19.81 , 26.33 , 52.95 , 3.637,

32.95 , 17.28 , 65.99 , 21.28 , 18.16 , 67.28 , 49.59 ,

140.7 , 20.34 , 25.11 , 23.37 , 8.477, 13.53 , 54.76 ,

14.19 , 46.11 , 70.63 , 49.85 , 45.41 , 79.09 , 68.2 ,

15.05 , 40.09 , 62.46 , 52.6 , 7.514, 25.73 , 9.577,

31.7 , 120.3 , 36.75 , 110.5 , 5.435, 27.44 , 31.83 ,

6.819, 17.39 , 29.19 , 34.22 , 30.37 , 48.6 , 10.2 ,

38.98 , 132.8 , 13.25 , 39.69 , 13.71 , 23.32 ]),

'delta_logO_H': array([ 0.3795 , -0.2468 , 1.159 , -0.3276 , -0.896 , -0.2532 ,

-0.7035 , 0.5588 , 0.2014 , 0.2183 , -1.65 , 0.366 ,

-0.7328 , 0.1837 , -0.4942 , 0.5304 , 0.1846 , 0.22 ,

-0.7342 , 0.1816 , 0.678 , -0.5568 , -0.0891 , -0.2413 ,

0.1106 , 0.3603 , 0.4864 , 0.1157 , 0.06798 , -0.9633 ,

0.9988 , -0.01389 , -0.2523 , 0.3159 , -1.385 , -1.108 ,

-0.4778 , 0.6161 , -0.4173 , 1.155 , -0.2238 , -0.2596 ,

0.6923 , -0.4224 , 1.27 , 0.4487 , -0.1107 , 1.237 ,

0.7325 , -0.2851 , -1.354 , -0.3475 , 0.1892 , -0.4236 ,

-0.7808 , 0.5495 , -0.4174 , 0.1361 , -0.0877 , -0.8897 ,

0.3616 , 0.2815 , 0.1661 , 1.011 , -1.969 , -0.9261 ,

1.118 , 1.114 , -1.365 , 0.03091 , 0.5538 , 0.0539 ,

-0.05804 , 0.2621 , 0.3747 , 0.8287 , 0.4081 , -0.347 ,

0.6551 , -0.5 , -0.797 , 0.01028 , 0.09176 , 0.1988 ,

0.3133 , 1.305 , 0.02101 , -0.005696, 0.3532 , -1.119 ,

2.016 , 0.4762 , -0.7656 , 0.3582 , -0.4937 , -0.3202 ,

-0.5463 , -0.2144 , -1.008 , -1.058 , -0.8279 , 0.536 ,

-0.4936 , 0.1985 ]),

'log10P': array([1.60638137, 1.26410916, 1.43039759, 1.56241183, 1.78074923,

1.55388303, 1.32694999, 1.34004732, 1.43232779, 1.40551711,

1.62685341, 1.36828688, 1.34966598, 1.00259798, 1.19783169,

1.34733002, 1.73447979, 1.30362798, 1.51241755, 1.43949059,

1.23879856, 1.56867098, 1.37767044, 1.46879026, 1.97016084,

1.64914006, 1.84676995, 2.05153839, 1.96070855, 1.57645653,

1.24674471, 1.20520436, 1.40823997, 1.75158701, 0.9927302 ,

1.84534614, 1.19117146, 1.34674405, 0.79190108, 1.53109555,

2.02160272, 1.87256414, 1.53970324, 1.67329744, 1.02734961,

1.11226977, 1.30211438, 1.23779499, 1.29314148, 1.33945144,

1.95837273, 1.6941663 , 1.29688448, 1.42045086, 1.72386596,

0.5607433 , 1.51785542, 1.23754374, 1.81947813, 1.32797162,

1.25911584, 1.82788598, 1.69539411, 2.1482941 , 1.30835095,

1.39984671, 1.36865871, 0.92824218, 1.1312978 , 1.73846344,

1.1519824 , 1.66379512, 1.84898921, 1.69766516, 1.6571515 ,

1.89812158, 1.83378437, 1.1775365 , 1.60303606, 1.79560198,

1.72098574, 0.87587119, 1.41043979, 0.98122949, 1.50105926,

2.08026563, 1.56525734, 2.04336228, 0.73519955, 1.43838411,

1.50283664, 0.83372069, 1.24029958, 1.46523409, 1.53428001,

1.48244479, 1.68663627, 1.00860017, 1.59084183, 2.12319808,

1.12221588, 1.5986811 , 1.13703745, 1.36772855])}

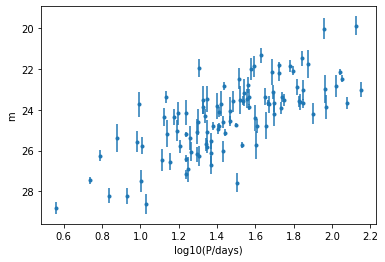

Let’s plot the period vs. the apparent magnitude for a single galaxy. How does it compare to the simulated data?

[11]:

fig, ax = plt.subplots()

g = galaxies[0]

ax.errorbar(data[g]["log10P"], data[g]["m_obs"], yerr=data[g]["sigma_m"], fmt=".")

ax.invert_yaxis()

ax.set_xlabel("log10(P/days)")

ax.set_ylabel("m")

[11]:

Text(0, 0.5, 'm')

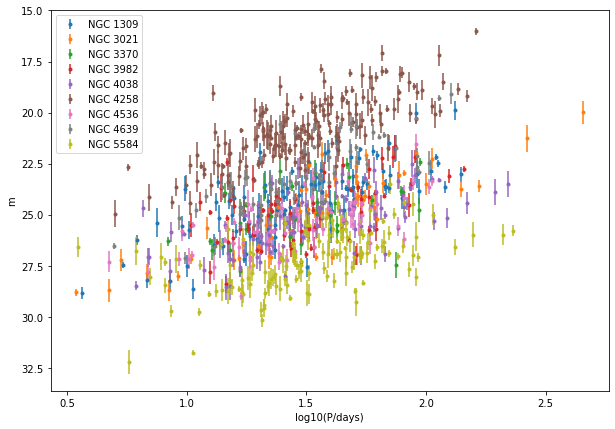

We can immediately see that here there are individual \(\sigma_m\) for each Cepheid, and a somewhat visible linear relationship. Now for all the galaxies…

[12]:

fig, ax = plt.subplots()

fig.set_size_inches((10, 7))

for g in galaxies:

ax.errorbar(data[g]["log10P"], data[g]["m_obs"], yerr=data[g]["sigma_m"], fmt=".",

label="NGC %s" % g)

ax.invert_yaxis()

ax.set_xlabel("log10(P/days)")

ax.set_ylabel("m")

ax.legend()

[12]:

<matplotlib.legend.Legend at 0x1226b9f40>

We can see rough linear relationships for each galaxy, but not a universal one. To better assess this, we should convert to absolute magnitude.

[13]:

# add absolute magnitude to the data

for g in galaxies:

# to be completed...

# data[g]["M_obs"] = absolute_magnitude(...)

pass

[14]:

# plot for absolute magnitude this time

fig, ax = plt.subplots()

fig.set_size_inches((10, 7))

for g in galaxies:

# to be completed...

pass

ax.invert_yaxis()

ax.set_xlabel("log10(P/days)")

ax.set_ylabel("M")

ax.legend()

No handles with labels found to put in legend.

[14]:

<matplotlib.legend.Legend at 0x1228f3ee0>

We should now see a stronger global relation, but still with considerable intrinsic scatter and potential differences between galaxies.

We can see other quantities in the provided files, such as a measure of the metallicity of each star, delta_logO_H.

What do the distributions of

delta_logO_Hlook like for different galaxies? Is there any relationship betweendelta_logO_HandM_obs?

[15]:

# to be completed...

How about the distributions of

Pin different galaxies? Why are some values ofPmore frequently observed than others?

[16]:

# to be completed...

In light of the data, what are some ways in which we may want to expand our model one day?

[17]:

# to be completed... make some bullet points

Further reading

Model building: Towards a principled Bayesian workflow and Falling (in love with principled modeling) by Michael Betancourt

The dataset prepared for this course is based on that reported in Reiss et al. 2011 and used in this example of the KIPAC Statistical Methods course.

[ ]: