4. Model development

Last time we used simulations as well as prior and posterior predictive checks to check and improve upon our model for Cepheids in a single galaxy. We then found the alpha and beta posteriors for each galaxy independently.

In order to use the period luminosity relation to extend the cosmic distance ladder, and eventually estimate the Hubble constant, the relation needs be universal, i.e. the same alpha and beta should hold for all galaxies, within uncertainties.

From our studies of individual galaxies, we should be able to see the similarities and differences between the results. But, how do we combine these results properly, and keep track of the uncertainties or correlations between parameters?

Hierarchical models

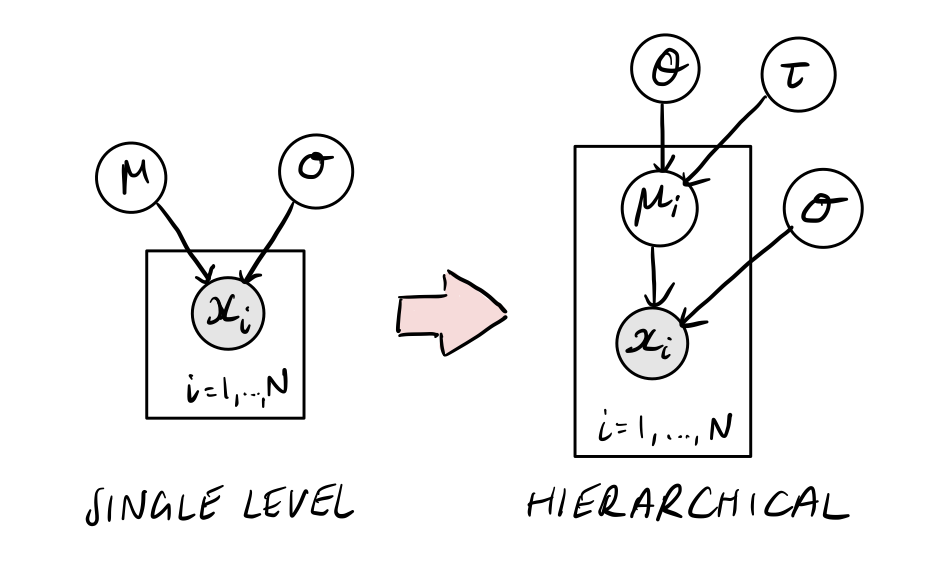

A natural way to combine the inidividual galaxy results is to build a hierarchical model for all galaxies that we have data for. To understand how to do this, we can start with a simpler example of a simple normal model, like the one we used to understand Stan in the introduction notebook.

Imagine that \(\mu\) is now sampled from some parent distribution, with mean \(\theta\) and standard deviation \(\tau\).

Single-level normal model:

Hierarchical normal model:

Hierarchical models are ideal for describing populations of objects with similar properties, like our Cepheid-hosting galaxies. If we let alpha and beta for each galaxy come from parent distributions with means mu_X and standard deviations tau_X, we can then fit for the shape of the universal alpha and beta distributions!

We see that building up hierarchies in this way can quickly lead to a large number of free parameters, which may seem scary. However, Hamiltonian Monte Carlo is designed to cope with these kind of models, and this should be no problem for Stan.

A joint hierarchical fit of all galaxies

Exercise 1 (10 points): Sketch the graphical model for our hierarchical model for Cepheids over all galaxies

A simplified Stan model for the full hierarchy can be found in cepheid_v3.stan. Note that this is based on cepheid_v1.stan, and you should also incorporate the changes that you made during the exercises in the model checking notebook in order to achieve a good fit to the data. I will use cepheid_v3.stan here to demonstrate a few important points about hierarchical modelling.

[1]:

!cat stan/cepheid_v3.stan

/**

* Hierarchical model for Cepheid variables

* - Multiple galaxies

* - Shared sigma_m

* - Weakly informative priors

**/

data {

/* usual inputs */

int Ng; // number of Galaxies

int Nt; // sum(Nc_g)

int gal_id[Nt]; // galaxy id for each entry [1 - 9]

vector[Nt] m_obs; // obs apparent mag.

real sigma_m; // mag uncertainty

vector[Nt] log10P; // log10(periods/days)

vector[Ng] z; // redshift of single galaxy

}

transformed data {

vector[Ng] dL;

/* luminosity distance */

dL = (3.0e5 * z) / 70.0; // Mpc

}

parameters {

/* parameters of the parent distributions */

real mu_alpha;

real<lower=0> tau_alpha;

real mu_beta;

real<lower=0> tau_beta;

/* individual galaxy parameters */

vector[Ng] alpha;

vector[Ng] beta;

}

transformed parameters {

vector[Nt] M_true;

vector[Nt] m_true;

/* P-L relation */

M_true = alpha[gal_id] + beta[gal_id] .* log10P;

/* convert to m */

m_true = M_true + 5 * log10(dL[gal_id]) + 25;

}

model {

/* priors */

mu_alpha ~ normal(0, 10);

mu_beta ~ normal(-5, 5);

tau_alpha ~ cauchy(0, 2.5);

tau_beta ~ cauchy(0, 2.5);

/* connection to latent params */

alpha ~ normal(mu_alpha, tau_alpha);

beta ~ normal(mu_beta, tau_beta);

/* connection to data */

m_obs ~ normal(m_true, sigma_m);

}

A few things worth noting:

The easiest way to deal with a ragged array structure (different number of Cepheids in each galaxy) in Stan is to flatten the array, and pass a vector with the galaxy IDs.

We introduce

muandtauparameters foralphaandbeta, with sensible priors.We assume the parent

alphaandbetadistributions are independent, as a starting point.In a hierarchical model, it is not so clear how to separate the priors and likelihood, the model is more a series of conditional steps.

Now, let’s see how the model performs when fitting our dataset.

[2]:

import numpy as np

import arviz as av

from cmdstanpy import CmdStanModel

[3]:

# load data

galaxy_info = np.loadtxt("data/galaxies.dat")

cepheid_info = np.loadtxt("data/cepheids.dat")

# compile data from all galaxies

galaxies = [int(ngc_no) for ngc_no in galaxy_info[:, 0]]

data = {int(x[0]):{"z":x[1]} for x in galaxy_info}

for g in galaxies:

j = np.where(cepheid_info[:, 0] == g)[0]

data[g]["gal"] = np.array([int(i) for i in cepheid_info[j, 0]])

data[g]["Nc"] = len(data[g]["gal"])

data[g]["m_obs"] = cepheid_info[j, 1]

data[g]["sigma_m"] = cepheid_info[j, 2]

data[g]["P"] = cepheid_info[j, 3]

data[g]["delta_logO_H"] = cepheid_info[j, 4]

data[g]["log10P"] = np.log10(data[g]["P"])

[4]:

# Select out two galaxies

sel = 2

# prepare Stan input

input_data = {}

input_data["Ng"] = len(galaxies[0:sel])

input_data["Nt"] = sum([data[g]["Nc"] for g in galaxies[0:sel]])

input_data["m_obs"] = np.concatenate([data[g]["m_obs"] for g in galaxies[0:sel]])

input_data["log10P"] = np.concatenate([data[g]["log10P"] for g in galaxies[0:sel]])

input_data["gal_id"] = np.concatenate([np.tile(i, data[g]["Nc"])

for i, g in enumerate(galaxies[0:sel])]) + 1

input_data["z"] = galaxy_info.T[1][0:sel]

input_data["sigma_m"] = 0.5

[5]:

stan_model = CmdStanModel(stan_file="stan/cepheid_v3.stan")

INFO:cmdstanpy:found newer exe file, not recompiling

INFO:cmdstanpy:compiled model file: /Users/fran/projects/BayesianWorkflow/src/notebooks/stan/cepheid_v3

[6]:

fit = stan_model.sample(data=input_data, chains=4, iter_sampling=1000)

INFO:cmdstanpy:start chain 1

INFO:cmdstanpy:start chain 2

INFO:cmdstanpy:start chain 3

INFO:cmdstanpy:start chain 4

INFO:cmdstanpy:finish chain 3

INFO:cmdstanpy:finish chain 1

INFO:cmdstanpy:finish chain 2

INFO:cmdstanpy:finish chain 4

We should always check the sampler diagnostics first!

[7]:

fit.diagnose();

INFO:cmdstanpy:Processing csv files: /var/folders/8d/cyg0_lx54mggm8v350vlx91r0000gn/T/tmpomivi5y3/cepheid_v3-202109111349-1-i76uz3kv.csv, /var/folders/8d/cyg0_lx54mggm8v350vlx91r0000gn/T/tmpomivi5y3/cepheid_v3-202109111349-2-sdcvnxp4.csv, /var/folders/8d/cyg0_lx54mggm8v350vlx91r0000gn/T/tmpomivi5y3/cepheid_v3-202109111349-3-mp1dna27.csv, /var/folders/8d/cyg0_lx54mggm8v350vlx91r0000gn/T/tmpomivi5y3/cepheid_v3-202109111349-4-ywx326xl.csv

Checking sampler transitions treedepth.

Treedepth satisfactory for all transitions.

Checking sampler transitions for divergences.

208 of 4000 (5.2%) transitions ended with a divergence.

These divergent transitions indicate that HMC is not fully able to explore the posterior distribution.

Try increasing adapt delta closer to 1.

If this doesn't remove all divergences, try to reparameterize the model.

Checking E-BFMI - sampler transitions HMC potential energy.

E-BFMI satisfactory.

Effective sample size satisfactory.

Split R-hat values satisfactory all parameters.

Processing complete.

[8]:

fit.summary()

[8]:

| Mean | MCSE | StdDev | 5% | 50% | 95% | N_Eff | N_Eff/s | R_hat | |

|---|---|---|---|---|---|---|---|---|---|

| name | |||||||||

| lp__ | -450.0 | 0.1100 | 2.600 | -450.00 | -450.00 | -440.0 | 560.0 | 340.0 | 1.0 |

| mu_alpha | -1.3 | 0.0630 | 1.800 | -3.90 | -1.30 | 1.6 | 800.0 | 490.0 | 1.0 |

| tau_alpha | 2.4 | 0.0890 | 2.500 | 0.61 | 1.70 | 6.4 | 800.0 | 490.0 | 1.0 |

| mu_beta | -4.2 | 0.0340 | 0.980 | -5.70 | -4.10 | -2.9 | 820.0 | 500.0 | 1.0 |

| tau_beta | 1.0 | 0.0680 | 1.900 | 0.06 | 0.47 | 3.4 | 760.0 | 470.0 | 1.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| m_true[158] | 28.0 | 0.0038 | 0.150 | 28.00 | 28.00 | 29.0 | 1508.0 | 925.0 | 1.0 |

| m_true[159] | 25.0 | 0.0014 | 0.068 | 25.00 | 25.00 | 25.0 | 2472.0 | 1516.0 | 1.0 |

| m_true[160] | 25.0 | 0.0014 | 0.068 | 25.00 | 25.00 | 25.0 | 2445.0 | 1499.0 | 1.0 |

| m_true[161] | 27.0 | 0.0025 | 0.100 | 27.00 | 27.00 | 27.0 | 1707.0 | 1046.0 | 1.0 |

| m_true[162] | 24.0 | 0.0018 | 0.074 | 24.00 | 24.00 | 24.0 | 1625.0 | 996.0 | 1.0 |

333 rows × 9 columns

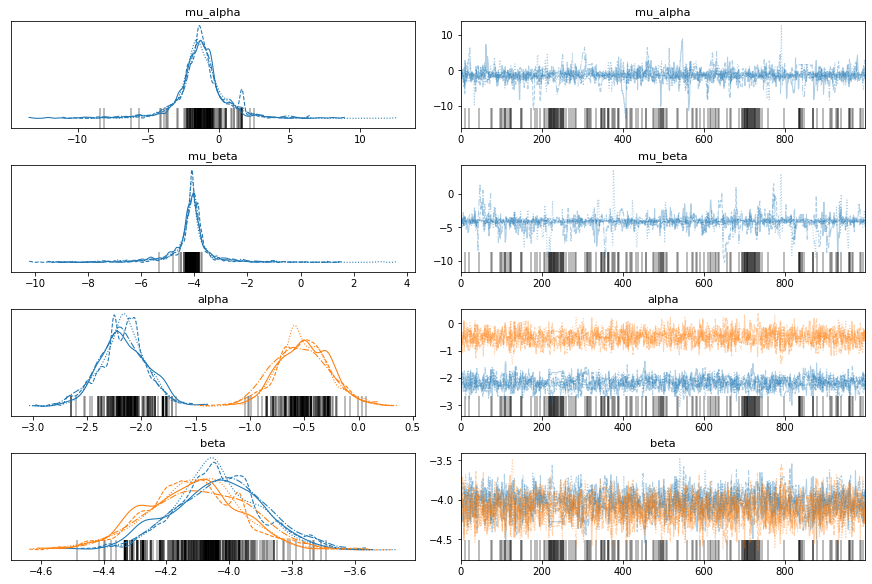

Hmmm, it seems something is off. Let’s check the trace and pair plots…

[9]:

av.plot_trace(fit, var_names=["mu_alpha", "mu_beta", "alpha", "beta"]);

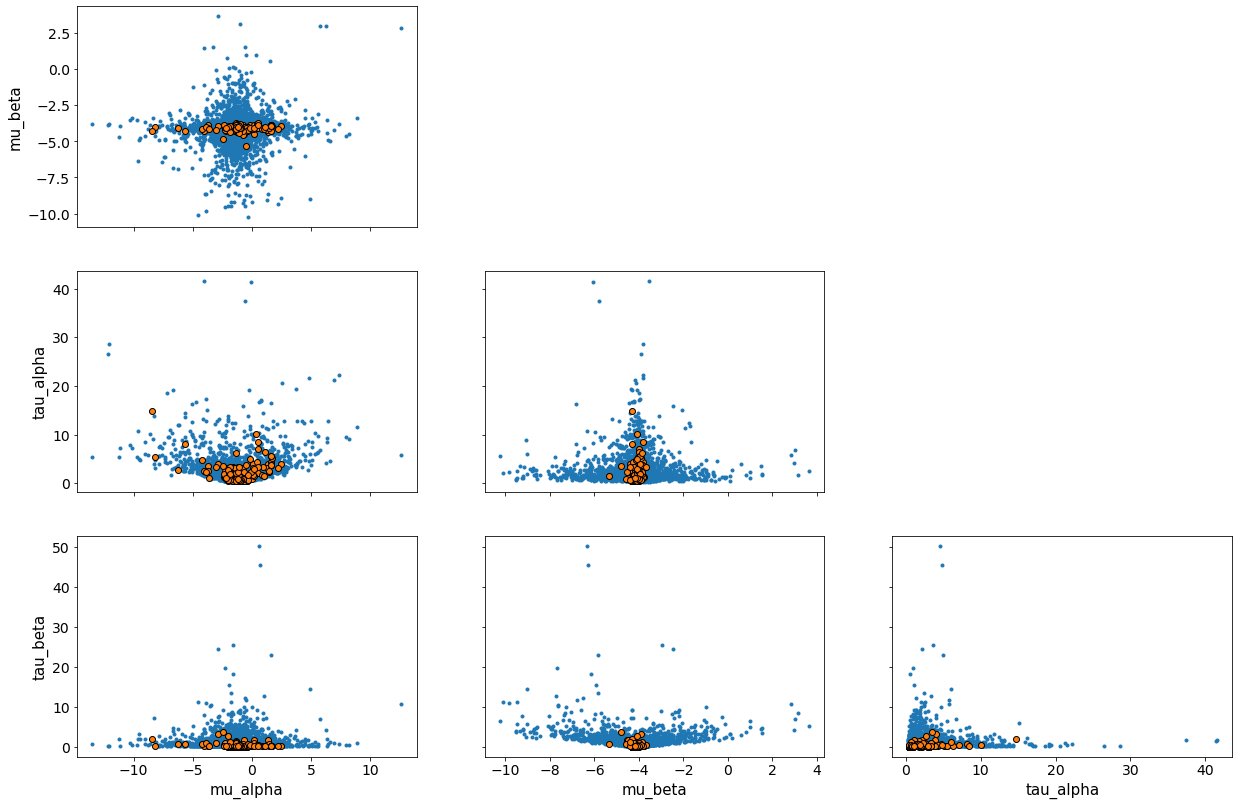

To make a sensibly-sized pair plot and view divergent transitions, we have to pass some more information to arviz. Let’s focus on the high level parameters now, seeing as these are the new things we have introduced. The Ng and Nt indicies are also specified, in case you want to use arviz to explore some other cases.

[10]:

fit_a = av.from_cmdstanpy(fit, coords={"Ng" : np.arange(input_data["Ng"]),

"Nt" : np.arange(input_data["Nt"])},

dims={"alpha": ["Ng"], "beta": ["Ng"], "M_true":["Nt"]})

av.plot_pair(fit_a, var_names=["mu_alpha", "mu_beta", "tau_alpha", "tau_beta"],

divergences=True, coords={"Ng": np.array([0, 1]), # Ng coords

"Nt": np.array([0])}); # Nt coords

The geometry of a hierarchical model with its many latent parameters is much more complex than that of a single model, and divergent transitions can occur. Fortunately, these can be used to help us understand and fix our model.

Here, we can see that the misspecification of the model causes problems, although these issues become less servere if we add data from more galaxies, as I’ll now demonstrate. It is important to remember that the geometry the sampler sees depends on both the model and the data.

Exercise 2 (5 points): Update the above Stan code with your improvements from the single galaxy case that you carried out in the model checking notebook and display the code. Verify that you can run the above demo to fit all galaxies with no divergent transitions.

Note: To start with, you can experiment with adding the reparametrisation and/or individual

sigma_minto the model. If you complete the homework in the model checking notebook, you’ll also figure out some other updates that you could use to get a better fit to the data.

[13]:

# to be completed...

Exercise 3 (10 points): Perform posterior predictive checks for your improved fit, and visualise the posterior predictive distribution of your fit. Can you fit the data well?

[12]:

# to be completed...

Homework exercise 1 (10 points): Compare the galaxy-level alpha and beta distributions to those found from individual fits that you completed as part of the model checking notebook. What differences can you see?

[ ]:

# to be completed...

Homework exercise 2 (20 points): Make a plot to show how the constraints on the parent distributions for alpha and beta change depending on the number of galaxies used in the sample. What happens when we only use one or two galaxies?

[ ]:

# to be completed...

Estimation of the Hubble constant

So far we have assumed a value for H0 in our fits in order to convert between apparent and absolute magnitude. This is necessary to fit for alpha and beta. Let’s think about how we can extend the model to make an estimate of H0, rather than assuming it.

Exercise 4 (5 points): What happens if we simply leave H0 as a free parameter in our existing hierarchical model? Why is this?

[ ]:

# to be completed...

Exercise 5 (5 points): What happens if we change the value of H0 assumed in our analysis? Which results change, and which stay the same?

[ ]:

# to be completed...

The Cepheid variable experts that we talked to earlier are impressed with our careful analysis of the period-luminosity relation. They share a new dataset of Cepheid variable stars that are in our own Galaxy, the Milky Way. The distances to these stars are well-measured using geometric methods.

This dataset is given in milkyway_cepheids.dat. It similar to that we have seen before, except that each Cepheid has a distance measurement in kpc. We are also told that the uncertainty in the distance measurements is 150 pc, and that on the apparent magnitudes is 0.1, for all Cepheids. Any effect due to metallicity can be assumed to be negligible here.

Let’s take a look at the data:

[ ]:

mw_cepheids = np.loadtxt("data/milkyway_cepheids.dat")

d = mw_cepheids.T[0] # distance in kpc

m_obs = mw_cepheids.T[1] # apparent mag

P = mw_cepheids.T[2] # period in days

Homework exercise 3 (20 points): Use this new data set to make an independent estimate of alpha and beta, without assuming H0, what do you find?

[ ]:

# to be completed...

Homework exercise 4 (20 points): Update the hierarchical model that you have to include key information from the calibration data into your joint fit. If done correctly, this should allow you to break the degeneracy in the model and directly estimate the Hubble constant. Try to include all relevant uncertainties.

Hint: Assume that the mean

alphainferred from the Milky Way dataset is fair estimate ofmu_alpha.

Homework exercise 5 (20 points): What factors affect the uncertainties in your estimate? How could you get a more constrained result?